Introduction

In the complex digital landscape, content moderation plays a vital role in ensuring the safety and integrity of online platforms. This crucial task relies on large numbers of human moderators who tirelessly review user-generated content on services like Facebook, Twitter, and YouTube. Despite their significant contribution, moderators usually work behind the scenes, carrying the demanding duty of keeping virtual spaces free from harmful material. Unfortunately, in the course of keeping users safe, they may put their own mental well-being at risk.

The mental health cost of moderation often goes unseen, yet it can be profound: daily exposure to disturbing content takes a toll on moderators' well-being and can lead to a range of psychological effects. They may experience heightened stress, emotional exhaustion, and long-term distress, similar to the symptoms seen in trauma-exposed occupations. The industry's response to this serious threat is under increasing scrutiny. While some social media providers have already taken steps to address these issues, their focus tends to lean more towards regulating content than towards the well-being of the moderators. This reveals a crucial gap in the digital ecosystem: the need for a comprehensive approach that manages content while also prioritising the well-being of the people who review it. Otherwise, even the best compliance strategies, sophisticated regulations, and strong policies will not be applied effectively if the people enforcing them are mentally and emotionally exhausted.

Mental health issues across various industries

Moderators across various sectors encounter significant challenges that can affect their mental well-being. How vulnerable they are depends on factors such as individual resilience, the level of support provided by employers, and the nature of the content they handle. Overall, the job carries persistent risks that can have serious consequences. The sheer volume of content and the need for rapid decision-making further raise stress levels in this fast-paced environment.

Below is an overview of moderation work in selected domains, focusing on the specific challenges moderators face in each:

- Social Media Platforms: This sector involves handling a wide range of distressing content, including hate speech, violence, self-harm, and explicit material, which can take a toll on moderators' mental well-being. The constant influx of such material and the need for quick decisions intensify stress levels.

- Online Gaming Communities: In this domain, moderators often deal with intense and frequent harassment, verbal abuse, and threats from users, which affect their mental health. Because gaming platforms operate 24/7, moderators often work irregular hours that can disrupt their personal lives.

- News and Media Websites: Moderators reviewing user-generated comments on news articles may encounter graphic content, violent discussions, and extremist views, which can significantly affect their mental well-being. The need for real-time monitoring and quick decisions in the news industry adds further pressure.

- E-commerce Platforms: Moderation here involves filtering customer reviews and complaints, which may expose moderators to fraudulent activity, scams, and disputes, increasing stress. Dealing with buyer dissatisfaction and product-related issues can add to the emotional strain.

- Healthcare Forums: In this area, moderators may come across sensitive health-related content, including discussions of illness and personal struggles, which can be emotionally taxing. Providing empathetic support to people facing health challenges may lead moderators to absorb community members' burdens, which can contribute to anxiety or depression.

- Dating and Relationship Platforms: Moderators here may be exposed to users sharing personal conflicts, breakups, and emotional distress, which can disturb their own peace of mind. Managing discussions around sensitive topics like mental health, abuse, and relationship struggles can be exhausting in the long term.

- Education Platforms: Moderators overseeing educational platforms face challenges related to academic dishonesty, plagiarism, and student disputes, which can increase frustration and stress.

Innovative solutions and best practices to mitigate the threat

It bears repeating that, in their everyday work, moderators are exposed to a vast array of disturbing content, including violence, hate speech, and pornography. This reinforces a growing call for the industry to adopt more holistic practices: robust mental health support systems and a work environment that acknowledges and mitigates psychological risks. In response, various solutions and best practices are being developed and implemented.

Below are noteworthy initiatives and advancements aimed at fostering the mental health of content moderators:

- Employee Assistance Programs (EAPs): Many companies, including Conectys, implement Employee Assistance Programs that offer confidential counselling and mental health support services. These initiatives can be valuable resources for moderators facing challenges, providing access to professional assistance beyond the workplace.

- Community Building Events: Organising team-building events or wellness activities can foster a sense of community among employees. These events offer an opportunity for relaxation, bonding, and mutual support outside the usual work context, boosting mood and well-being.

- Mental Health First Aid Training: Providing moderators with Mental Health First Aid training can empower them to recognise signs of distress in their colleagues and offer initial support. This peer-to-peer aid system can strengthen the team’s mental health support network.

- Stress Management Workshops: It is also worth organising workshops or training sessions tailored specifically to content moderators, equipping them with practical techniques to cope with stress and build resilience.

- Holistic Wellness Programs: Additionally, expanding wellness programs to include holistic approaches, such as yoga, mindfulness sessions, or nutritional guidance, can contribute to moderators’ overall well-being by addressing physical and mental health.

- Anonymous Reporting Systems: Implementing anonymous reporting systems allows moderators to express concerns or report distressing incidents without fear of reprisal. This promotes a culture of transparency and trust, encouraging individuals to seek help when needed.

- Mental Health Check-ins: Routine mental health check-ins, conducted through surveys or one-on-one discussions, are becoming standard practice. This proactive approach enables early identification of issues and timely support for moderators facing mental health challenges.

- Regular Feedback Channels: Establishing regular feedback channels for moderators to share their thoughts and concerns helps companies continually refine their support programs. It also gives moderators a voice in shaping the initiatives designed for their well-being.

- Access to Mental Health Professionals: Companies may facilitate access to mental health professionals for moderators who require more specialised support. This can involve partnerships with counselling services or financial assistance for external mental health resources.

- Workload Management: Ensuring realistic workload expectations and manageable caseloads for moderators is crucial. Companies can implement strategies to prevent burnout, such as workload assessments and redistribution when necessary.

- Flexible Work Arrangements: Many digital services are adopting flexible schedules that allow moderators to align their work with their personal well-being needs. This approach acknowledges diverse lifestyles and promotes a healthier work-life balance.

- Elastic Leave Policies: Offering flexible leave policies allows moderators to take time off when needed without additional worry about job security. This flexibility helps sustain a healthy work environment.

- Continuous Learning Opportunities: Providing opportunities for continued learning and skill development beyond initial moderation training can empower staff and support their professional growth and sense of accomplishment.

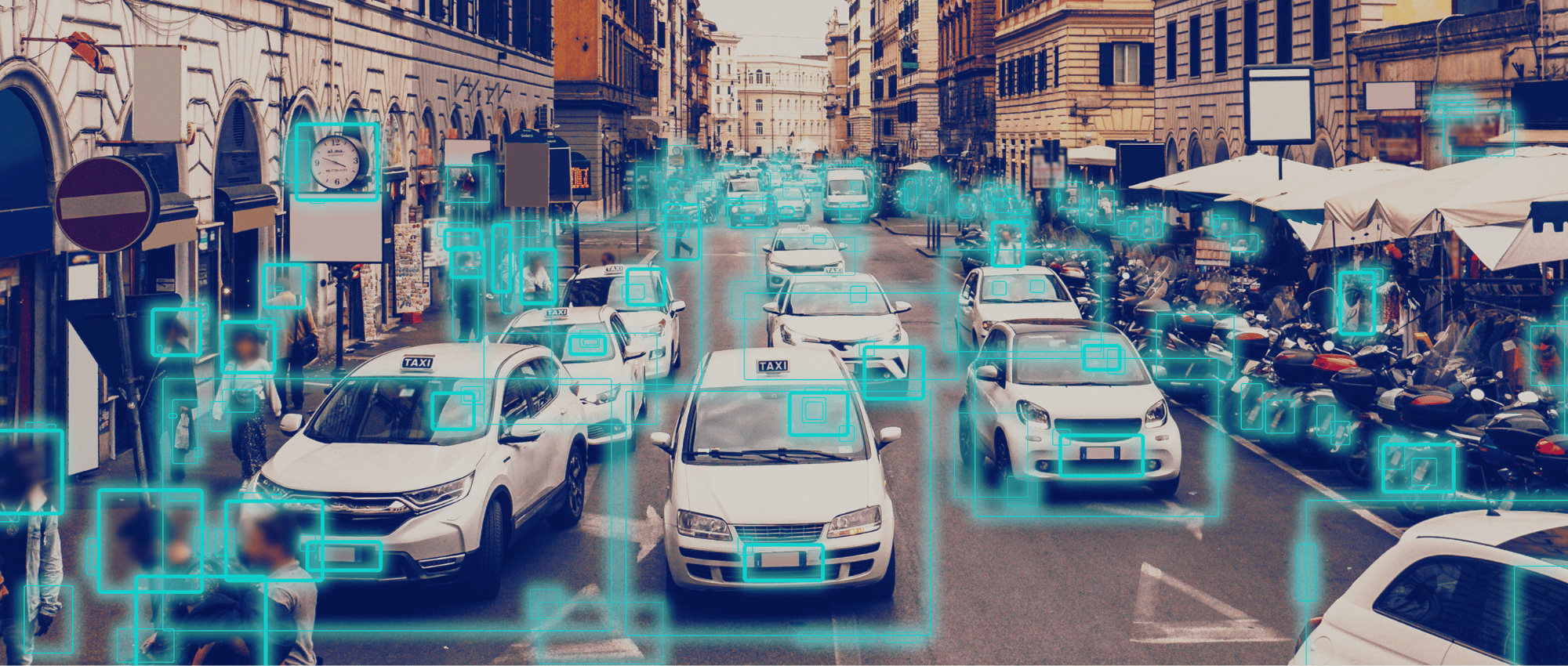

- Technological Innovations in Moderation: Content moderation increasingly integrates advanced AI tools, such as image blurring and resizing, to reduce the intensity of disturbing content and its potential harm. This helps mitigate psychological risks and supports moderators' well-being and job satisfaction.

- Wellness Apps and Platforms: Companies are incorporating wellness applications into daily routines, providing moderators with accessible resources for meditation, stress relief, and mental health management.

- Cultural Considerations and Tailored Support: Implementing a flexible program framework that respects cultural differences is increasingly common. Training life coaches in basic psychological first aid complements the expertise of in-country mental health professionals, contributing to a healthier environment.

- Emphasising the Greater Purpose: Continually highlighting the meaningful work of moderators as the first line of defence against harmful content can strengthen their mental well-being. Group and individual sessions, together with onboarding and training practices, reinforce moderators' positive role in online safety.

The role of humans in the moderating processes

Thousands of people in countries such as India, the Philippines, the U.S., Romania, and Poland play a vital role in filtering user-generated content. They make quick decisions on diverse material, often navigating ambiguous guidelines and cultural differences. Although AI is increasingly embedded in their routines, bringing benefits such as automation and scalability, the human touch remains irreplaceable in many cases.

Human moderators bring empathy, cultural sensitivity, and an understanding of nuanced language that AI might miss. These qualities are crucial for interpreting subtle sarcasm and humour in content that does not neatly fit predefined rules, and they help prevent misunderstandings and unintended offence, especially across languages and cultures. For instance, when a user posts a sarcastic remark that threatens a specific minority group, a skilled human moderator can recognise its intent and remove it. An AI-driven tool, by contrast, may approve the same post because it cannot reliably grasp the deeper meaning.

All of this underscores the importance of investing effort and resources in the best possible working conditions for moderators, acknowledging their value, and recognising their key role over the long term. Affirming their pivotal position in keeping the virtual ecosystem safe should be a guiding principle for every digital platform committed to lasting safety and to communities built on responsible and considerate interaction.

Industry responsibility and public awareness

The journey towards a more sustainable and mentally healthy moderation environment is complex and critical. It requires a multifaceted approach that includes industry leadership, public awareness, collaborative efforts, advocacy, and education. By addressing these areas, the digital community can ensure that content moderation effectively safeguards online spaces while protecting the mental health of those who perform this essential work. Heightened public scrutiny, as seen in documentaries and investigative journalism, is pressuring industry leaders to re-evaluate their practices. This awareness is crucial for driving change and ensuring accountability in the following areas:

- Industry Responsibility: There is an urgent need for industry leaders to adopt standardised best practices for content moderation. This entails implementing advanced technological solutions and establishing comprehensive mental health support systems for moderators. As the digital landscape evolves, so must the strategies to protect those who work behind the scenes.

- Collaborative Efforts: The potential for collaboration between companies, nonprofits, and government agencies is immense. These partnerships can lead to sector-wide standards, shared resources for mental health support, and innovative approaches to content moderation. Collaboration can also facilitate research into the long-term effects of moderation work, leading to better practices and policies.

- Advocacy and Policy Change: Advocacy groups and policymakers play a critical role in this ecosystem. They can influence legislation and industry standards, ensuring that the mental health of content moderators is not sidelined. Advocacy and lobbying for policy change can lead to more stringent regulations and guidelines for digital platforms.

- Education and Training Initiatives: Ongoing education and training initiatives are essential for moderators and the wider industry. These initiatives can focus on best practices, mental health awareness, and resilience training. The sector can foster a more informed and empathetic approach to content moderation by educating all stakeholders, from executives to entry-level employees.

Conclusions: What is worth remembering

In conclusion, forging a sustainable and compassionate approach to content moderation is an essential evolution in the digital age. Achieving this requires a united effort from key players: industry leaders, moderators, advocacy groups, and policymakers. The focus is on establishing an environment where moderators' mental health is acknowledged and prioritised. The future of content moderation depends on finding a balance that values human well-being as much as online safety. It demands a collective awakening within the industry to the challenges moderators face, and a commitment to practices that support and sustain them. This involves embracing technology, raising public awareness, advocating for policy changes, and fostering collaboration across sectors. Ultimately, the aim is to redefine content moderation as a role that safeguards both the integrity of digital spaces and the mental well-being of the people who protect them. By taking these steps, the industry can help ensure that content moderation becomes a sustainable profession that fully respects and supports its workforce. This isn't just a duty. It is crucial for the health of our digital communities and the individuals dedicated to maintaining them.