We’ve been hearing quite a bit about the “metaverse” recently.

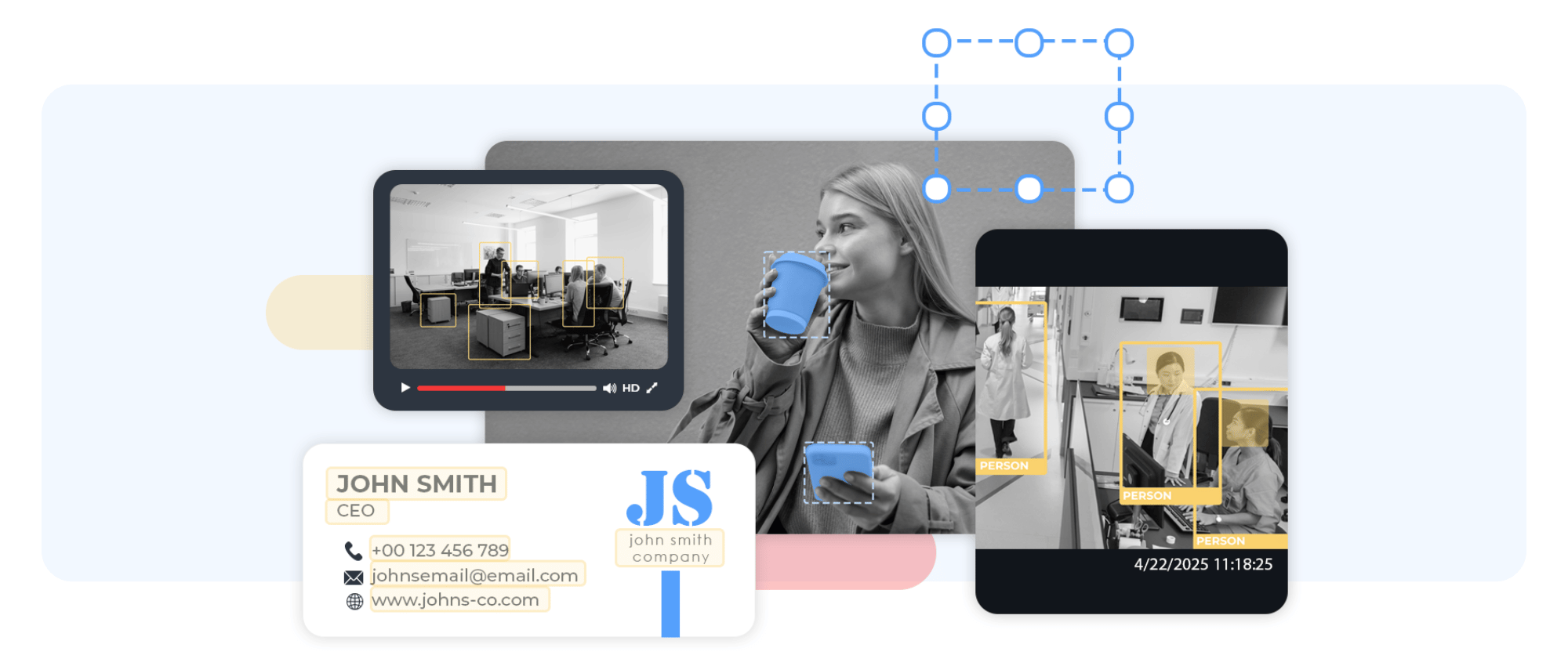

Microsoft has one version planned for early 2022, and Facebook has already launched elements of it as “the successor to the mobile Internet.” There are different definitions and approaches to what exactly the “metaverse” is, but essentially it will be an avatar-driven series of interconnected digital spaces — think virtual or augmented reality, at first — where you can do things you couldn’t do in the physical world, such as, well, sword fighting with a duck.

The metaverse operating at scale, i.e. with millions of people using it regularly, is still some way off; 2024 or 2025 seems realistic. Facebook does already have products, like Horizon Home, a social platform to help people create and interact with each other in the metaverse. There is also Horizon Venues, which essentially lets you and a friend attend a concert or sporting event together, even if you’re not in the same physical space.

There’s a lot we don’t know, and one of the biggest open questions is this: How exactly will the metaverse be moderated? It is a pressing question because content moderation on social media platforms is a major legislative topic in virtually every G20 country at present. If the existing platforms are still struggling with moderation, and still navigating the human vs. AI question as they scale it, then the metaverse only adds new moderation complexities.

So, what do we possibly know about moderating the metaverse?

Well, the not-so-good news for users upfront: Andrew Bosworth, the current CTO of Meta (recently rebranded from Facebook), says that content moderation in the metaverse is “virtually impossible.” Nevertheless, the company has been meeting with a series of think tanks to determine the best safety protocols for the metaverse. And it’s important that we, as consumers and ideally protectors of the Internet, arrive at some idea of effective metaverse moderation before more and more metaverse features launch. As Lawfare Blog explains:

> But increased immersion means that all the current dangers of the internet will be magnified. Today’s relatively crude virtual and augmented reality devices already demonstrate that people react to the metaverse with immediacy and emotional response similar to what they would experience if it happened to them in the offline world. If someone waves a virtual knife in your face or gropes you in the metaverse, the terror you experience is not virtual at all. People’s brains respond similarly when recalling memories formed in virtual reality and remembering a “real”-world experience; likewise, their bodies react to events in virtual reality as they would in the real world, with heart rates speeding up in stressful situations.

Some of the challenges we’ve seen in moderating large platforms could therefore hit even harder emotionally in the metaverse. We need the right guardrails.

At this stage, the first wave of metaverse content moderation will likely resemble current approaches: a mix of technology and human review, with AI taking the lead on the tech side to flag the more obvious and egregious offenses. Human agents would likely have avatars of their own and could potentially even enter a metaverse portal (say, a fight between two people, or a medieval kingdom) and moderate from inside it. The moderator avatar might operate as a “deus ex machina,” appearing from above in the game or scenario and explaining how another avatar has violated the rules or terms of service.
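To make the tech-plus-human split concrete, here is a minimal sketch of how such triage might look. Everything in it is an assumption for illustration: the `Event` fields, the `violation_score` (a stand-in for whatever an AI classifier would output), and the two thresholds are hypothetical, not any platform's actual system.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO_FLAG = "auto_flag"        # obvious, egregious offense: flagged by the AI
    HUMAN_REVIEW = "human_review"  # ambiguous: sent to a human moderator
    ALLOW = "allow"                # no action needed


@dataclass
class Event:
    event_id: str
    space: str              # hypothetical name of the virtual space
    violation_score: float  # hypothetical classifier output in [0, 1]


# Illustrative thresholds only; real values would need careful tuning.
AUTO_FLAG_THRESHOLD = 0.9
REVIEW_THRESHOLD = 0.5


def route_event(event: Event) -> Route:
    """Decide who handles an event based on its score."""
    if not 0.0 <= event.violation_score <= 1.0:
        raise ValueError("violation_score must be between 0 and 1")
    if event.violation_score >= AUTO_FLAG_THRESHOLD:
        return Route.AUTO_FLAG
    if event.violation_score >= REVIEW_THRESHOLD:
        return Route.HUMAN_REVIEW
    return Route.ALLOW


def triage(events):
    """Group events by route; the human queue is ordered most severe first."""
    queues = {route: [] for route in Route}
    for event in events:
        queues[route_event(event)].append(event)
    queues[Route.HUMAN_REVIEW].sort(
        key=lambda e: e.violation_score, reverse=True
    )
    return queues
```

In a setup like this, the human-review queue is where a moderator avatar would come in: the top item tells the moderator which space to enter first.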

There’s a lot we don’t yet know, but it does seem that the Internet’s next iteration might be these immersive worlds, and we need to make sure they are safe for the users who choose to embark on adventures within them.
